Development documentation

The only correct behaviour is for lleaves to behave exactly like LightGBM.

The best way to figure out LightGBM’s behaviour is to run and read their code.

LightGBM’s model serialization

The serialization format isn’t documented. The information here was compiled by reading through LightGBM’s codebase.

Decision Types

The encoding of the decision types is fairly straightforward and determined by masks. Three things are encoded:

Categorical vs Numerical node.

Default left: This determines which direction (left or right) is taken when the missing type is encountered.

Missing type:

NaN,NoneorZero.

Branch prediction

The internal_count variable stores for each node of the tree, how many datapoints of the dataset pass through it.

For the root node this is equal to len(dataset).

The respective information for tree leaves is saved in leaf_count.

This could be used to add branch prediction hints to the LLVM compiler.

Missing Values

For dealing with missing values it’s best to look at the LightGBM code.

Example: If the missing type is None and a NaN is encountered, the NaN gets mapped to 0.0.

This means for this node NaN will behave like 0.0, irrespective of the value of default left.

In lleaves all combinations of decision type, default left and missing type are tested against LightGBM.

Categorical features

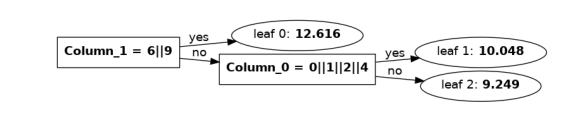

In LightGBM, categoricals are represented as non-negative integers smaller than 2^31-1. A tree with categoricals looks like this in the model.txt:

Tree=0

num_leaves=3

num_cat=2

split_feature=1 0

split_gain=88471.5 4720.24

threshold=0 1

decision_type=1 1

left_child=-1 -2

right_child=1 -3

leaf_value=12.616231057968633 10.048276920678525 9.2489478721549396

leaf_weight=106 120 274

leaf_count=106 120 274

internal_value=10.182 9.48306

internal_weight=0 394

internal_count=500 394

cat_boundaries=0 1 2

cat_threshold=576 23

is_linear=0

shrinkage=1

To make comparing against many categoricals faster, LightGBM uses vectors of bits which are stored in as

32-bit integers in cat_threshold.

Looking at the root node’s threshold: 576 = 2^6 + 2^9.

Therefore categories 6 and 9 go left, all others go right.

The alternative would be to implement this as two nodes with a X==6? and a X==9? condition, which is how ONNX does it.

When the largest category appearing in a node is bigger than 32 we need more integers to store the bits.

This is specified by the cat_boundaries.

For a categorical node its threshold is the index into the cat_boundaries-array.

The number of i32 bitvectors for a categorical node is given by cat_boundaries[threshold+1]-cat_boundaries[threshold].

An example from the model.txt of the airlines model:

cat_boundaries=0 1 2 10 19 27 36 45 54 63 70 79 84 93 94 102 110 118 126 135 136 142 151 160 165

The bitvectors of the first three categorical nodes are <1 x i32>, <1 x i32> and <8 x i32> long.

Multiclass prediction

Multiclass prediction works by basically fitting individual forests for each class, and then running a softmax across the outputs. So for 3 classes with 100 iterations LightGBM will generate 300 trees. The trees are saved in the model.txt in strides, like so:

tree 0 # (=class 0, tree 0)

tree 1 # (=class 1, tree 0)

tree 2 # (=class 2, tree 0)

tree 3 # (=class 0, tree 1)

tree 4 # (=class 1, tree 1)

...

Software Architecture Overview

lleaves.py: Provides the main class (Model) with the predict interface. Also handles the engine (LLVM execution environment) in a centralized way. The final compile step (LLVM IR ⇒ ASM) is performed here.compiler: this model ingests a model.txt and returns the optimized LLVM IR moduleast: Scans the model.txt, parses the content to an abstract-syntax tree.codegen: Takes the AST, optimizes it and emits LLVM IR.tree_compiler.py: Calls the other modules, runs compiler optimization passes.

LLVM IR Generation

To look at the IR generated by lleaves there are two useful environment variables:

Set

LLEAVES_PRINT_UNOPTIMIZED_IR=1to look at the LLVM IR generated bylleavesbefore any LLVM compiler optimization passes are run.Set

LLEAVES_PRINT_OPTIMIZED_IR=1to look at the final LLVM IR after the LLVM compiler optimization passes.Set

LLEAVES_PRINT_ASM=1to look at the assembly code produced by the compiler.

Interfacing with a Compiled Model in C++

To interface with the compiled model in C++, you need the following steps:

Generating Cache Files using Python.

If you already have a trained model file named lgbmModel.txt, the command to read and compile it may look like this.:

llvm_model = lleaves.Model(model_file="lgbmModel.txt")

llvm_model.compile(cache='./lleaves.o')

...

Modifying and Moving Relevant Files

The modifications were made primarily based on this section of code:

https://github.com/siboehm/lleaves/tree/master/benchmarks/c_benchMove

lleaves.oto the current directoryModify the

c_bench.cpp, like so:#include "c_bench.h" #include <vector> #include <iostream> int main() { std::vector<double> data = {8.81351540e+00, -2.74901880e-01, -4.78453119e-02, 2.25956985e+01, -2.75495538e-01, -9.12007856e-02, -4.78453119e-02, 1.88485949e+00, 1.88485949e+00, 1.64226175e-03, 1.64226175e-03}; double result; forest_root(data.data(), &result, 0, 1); std::cout << "Result: " << result << std::endl; return 0; } ...

Due to C++ name mangling, it’s important to retain the

extern Cfunction declaration forforest_rootin the header. Else the linker will fail.Modify the

CMakeLists, like so:cmake_minimum_required(VERSION 3.19) project(c_bench) set(CMAKE_CXX_STANDARD 11) add_executable(c_bench c_bench.cpp) add_dependencies(c_bench run) target_link_libraries(c_bench ${CMAKE_CURRENT_SOURCE_DIR}/lleaves.o) ...

Build the code

If you are using CMake, you can run the following commands to build and run the program:

cmake .. && make ./c_bench ...

If you are using g++, you can run the following commands to build and run the program:

g++ c_bench.cpp lleaves.o -o c_bench ./c_bench ...